In May 2016, UNISON ran an indicative ballot of ambulance staff in England in which 41% of members participated.

Participation relied on members clicking a private link sent to them via email or text message – there was no paper format or direct link through a website.

If we count turnout only among members we were able to send the private link to (i.e. those with an email address or mobile number on record), it rises to 53%.
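The two turnout figures differ only in their denominator. Here is a minimal sketch in Python, assuming a hypothetical `Member` record with contact flags and a voted flag (an illustration, not UNISON's actual data model):

```python
from dataclasses import dataclass


@dataclass
class Member:
    # Hypothetical fields for illustration only.
    has_email: bool
    has_mobile: bool
    voted: bool


def turnout(members):
    """Share of the given members who voted."""
    members = list(members)
    if not members:
        return 0.0
    return sum(m.voted for m in members) / len(members)


def reachable_turnout(members):
    """Turnout among members we could send the private link to."""
    return turnout(m for m in members if m.has_email or m.has_mobile)
```

Take 100 hypothetical members, 80 of them reachable and 40 voting. The first function gives 40% and the second 50%. The private link was the only way in, so every voter was reachable. That means overall turnout equals reachable turnout multiplied by the reachable share. If the 41% and 53% figures use the same membership base, this would imply that roughly 77% of members (0.41 ÷ 0.53) could be sent the link, allowing for rounding.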

Given the review and pilots of online voting due to take place as a result of the Trade Union Act, and the Act's requirement for over 50% turnout in many of our ballots, it is worth looking at how this campaign was run to identify:

- How it achieved these relatively high levels of turnout for a consultative ballot;

- How useful it was in preparing us for any potential statutory ballots held online in future;

- What could be done differently to further increase turnout, run the campaign better or help to prepare members for any statutory ballots.

- What did we do differently in running the ballot

- What worked, part 1: Collecting emails as part of the campaign

- What worked, part 2: Using digital tools to make the ballot secure, anonymous and trustworthy

- What worked, part 3: a comprehensive central email and SMS programme

- What worked, part 4: reporting and monitoring responses

- What didn’t work

- Some lessons learned

What did we do differently in running the ballot

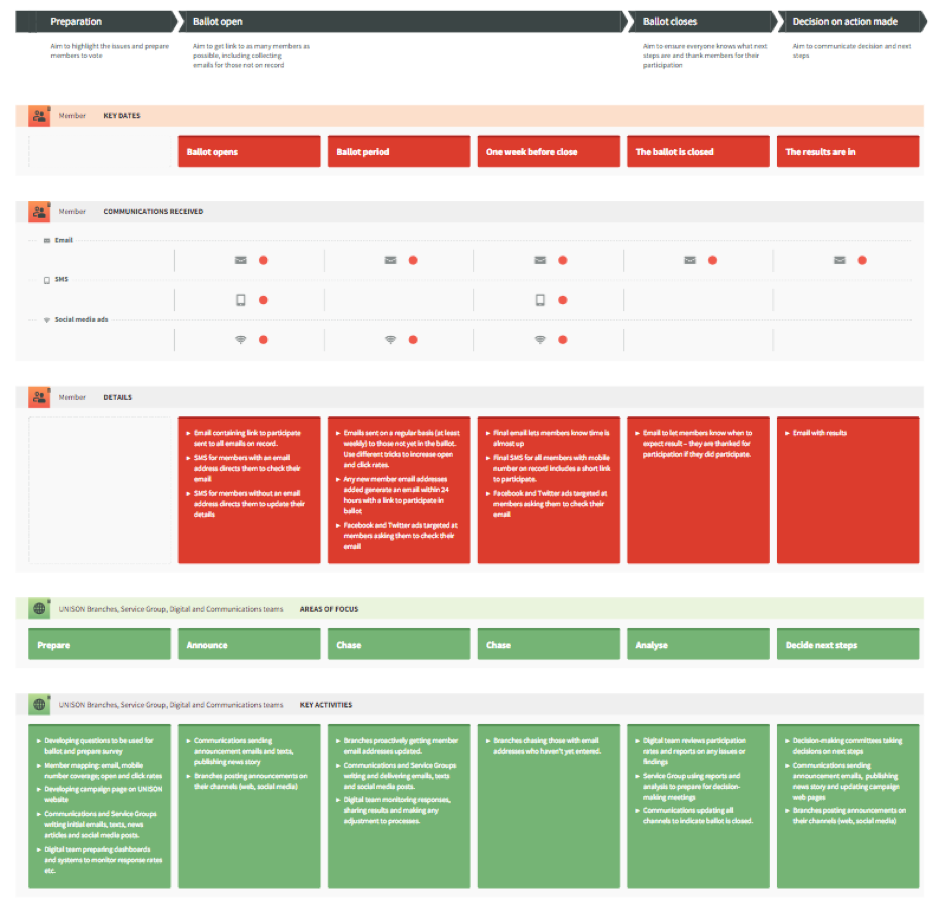

A core difference between this campaign and other consultative ballots was the split of responsibility between UNISON branches and the UNISON national team.

Branches did not need to spend time administering the ballot directly or sending emails and text messages. Instead, they focused their on-the-ground organising on collecting members' email addresses so that those members could take part in the ballot, and on persuading members who had received links to participate.

UNISON Centre, in particular the digital team, handled the way the ballot was run – in consultation with the service groups and regions. We used a new set of digital tools to track who had responded (but not how they responded, as the ballot was anonymous). We were therefore able to automate emails and SMS messages so that they only went to those who hadn’t yet participated.
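Tracking who had responded without recording how they responded comes down to keeping two separate records. The sketch below is a hypothetical illustration, not the tooling UNISON actually used. `BallotStore`, `record` and `still_to_vote` are invented names.

```python
from dataclasses import dataclass, field


@dataclass
class BallotStore:
    """Hypothetical store that keeps *who* voted apart from *what* they voted."""
    participated: set = field(default_factory=set)  # membership numbers only
    answers: list = field(default_factory=list)     # answers only, no identifiers

    def record(self, membership_number, answer):
        # Two separate writes: nothing stored in `answers` refers to a member.
        self.participated.add(membership_number)
        self.answers.append(answer)


def still_to_vote(all_members, store):
    """Membership numbers that should get the next email or SMS reminder."""
    return [number for number in all_members if number not in store.participated]
```

A production system would also need to stop timestamps or storage order from linking an answer back to a member. This sketch shows only the separation of the two records and how the reminder list follows from the participation register.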

What worked, part 1: Collecting emails as part of the campaign

As well as the high overall turnout, the proportion of ambulance staff with an email address on record rose from 66% to 73%, with 1,365 new email addresses added. This rise of seven percentage points is significant and will allow better communication with members in future, including in the event of an actual ballot.

It is a testament to the hard work put in by branches that the proportion of members with an email address on record rose so far in such a short time.

What worked, part 2: Using digital tools to make the ballot secure, anonymous and trustworthy

The digital team were able to call on new digital technologies we’ve started to adopt to make the ballot more effective and easier for members to participate in:

- We could be certain that each member had only one vote. Voting was tied to the data held in our membership systems, which ensured our turnout and response rates are representative.

- We kept each member's vote anonymous, to encourage them to participate, while still being able to see whether a member had voted (just not how they voted).

- We made the design and address of the ballot look as trustworthy as possible. The ballot was hosted on the research.unison.org.uk domain, and the page was styled in UNISON purple and green.
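One common way to get both one vote per member and anonymity from emailed links is to give each member a single-use token. This is a hypothetical sketch of that general technique, not a description of how SurveyMonkey or SurveyGizmo work internally. `TokenBallot` and the `https://example.org/ballot` URL are placeholders.

```python
import secrets
from urllib.parse import urlencode


class TokenBallot:
    """Hypothetical one-member-one-vote ballot using single-use link tokens."""

    def __init__(self, membership_numbers):
        # One unguessable token per member, from the stdlib's secure generator.
        self._token_for = {m: secrets.token_urlsafe(16) for m in membership_numbers}
        self._member_for = {t: m for m, t in self._token_for.items()}
        self.participated = set()  # who has voted (membership numbers)
        self.answers = []          # what was voted, stored without identifiers

    def link_for(self, membership_number, base_url="https://example.org/ballot"):
        # The private link carries only the token, never the membership number.
        return f"{base_url}?{urlencode({'t': self._token_for[membership_number]})}"

    def vote(self, token, answer):
        member = self._member_for.get(token)
        if member is None:
            raise ValueError("unknown token")
        if member in self.participated:
            raise ValueError("this member has already voted")
        self.participated.add(member)
        self.answers.append(answer)
```

Each member's private link carries only an unguessable token. The ballot rejects a second vote made with the same token and stores answers without the membership number.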

What worked, part 3: a comprehensive central email and SMS programme

Being able to deliver a full email and SMS programme prompting people to vote helped us get the fullest possible picture of feeling among members.

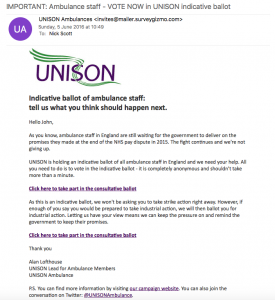

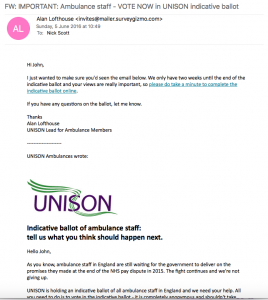

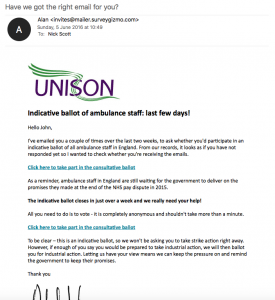

- Communications were only sent to those who had not yet participated, so we could send several emails without annoying people who had already voted with further messages from UNISON. Each email we sent led to a sizeable increase in turnout within two days of being sent.

- Each email was personalised, and we tested different subject lines and senders. This helped prompt people who might otherwise have ignored our emails to open them.

- We also sent text messages. These took account of the fact that some members have a mobile number but no email address on record, and others have an email address on record but don't check it, though they do check text messages. In general, people find text messages more intrusive than emails, so we were careful to limit how many we sent. We sent a link to vote by SMS only once, towards the end of the campaign and after a number of emails had already gone out. Earlier on, we had sent text messages to members who had a mobile number but no email address on record, asking them to use our Quick Online Contact Update tool to give us an email address to send their ballot to.
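The contact rules described above can be written as a small planning function. This is a sketch under stated assumptions. The wave numbering, the message kinds `ballot_link` and `add_email`, and the `Contact` fields are hypothetical, and wave 1 stands in for the early "add your email" text.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Contact:
    # Hypothetical contact record for illustration only.
    number: str
    email: Optional[str]
    mobile: Optional[str]


def plan_wave(contacts, voted, wave, final_sms_wave):
    """Return (channel, membership number, message kind) tuples for one wave."""
    sends = []
    for c in contacts:
        if c.number in voted:
            continue  # never contact people who have already participated
        if c.email:
            sends.append(("email", c.number, "ballot_link"))
        if c.mobile and wave == final_sms_wave:
            # The single SMS ballot link, sent late to every non-voter with a mobile.
            sends.append(("sms", c.number, "ballot_link"))
        elif c.mobile and not c.email and wave == 1:
            # Early text asking mobile-only members to add an email address.
            sends.append(("sms", c.number, "add_email"))
    return sends
```

Keeping the rules in one function makes the timeline explicit. Everyone running the ballot can see which members get which message in each wave.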

What worked, part 4: reporting and monitoring responses

By using new digital tools linked to our membership records we were able to develop a very accurate and completely up-to-date view of participation.

- Branches were sent information on response rates and could use it to identify where their effort could help increase participation: we had the best response where local branches worked with us and contacted their members in person to encourage them to respond.

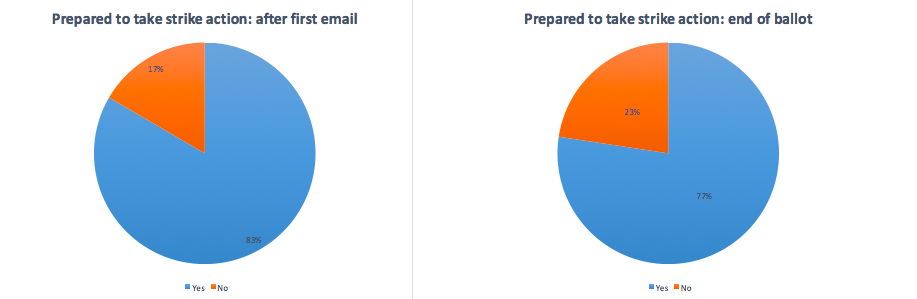

- We were able to see trends in the data that can inform decisions on future action. Watched over time, the numbers for and against strike action changed, presumably because the members most inclined to take action are also the most committed to it, and so the quickest to vote. In the first week, a much greater percentage were in favour of strike action; this dropped by 5 percent as we prompted more members to participate.

As this was a single consultative ballot for all members, it is also straightforward to identify what might make a difference to turnout in future statutory ballots and help achieve 50% turnout. For example, we asked members to self-declare their job roles. This will help identify which roles had the highest response rates or feel most strongly about action, and could lead to more targeted industrial action where feelings are running strongest.

- Member replies were handled or triaged by UNISONDirect, who directed people to branches, the digital team or the service group for a response. This makes emails more personal for our members: we are conversing with them rather than just talking at them. A number of members were persuaded to vote after their queries were answered. We were also able to hear from members who might not be reached in other ways because they are not engaged. For example, as we sent more emails, we received more replies from people who didn't believe the ballot was for them because they weren't in “frontline” roles (paramedic, technician, etc.).
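Branch-level response rates and the drift in support over time are both simple aggregations. Here is a minimal sketch with hypothetical inputs:

- `(membership_number, branch)` pairs from the membership records;
- the participation register as a set;
- answers as `"yes"`/`"no"` strings in the order they arrived.

```python
from collections import defaultdict


def response_rates(members, voted):
    """Response rate per branch.

    members: iterable of (membership_number, branch) pairs (hypothetical shape)
    voted: set of membership numbers that have participated
    """
    totals = defaultdict(int)
    responded = defaultdict(int)
    for number, branch in members:
        totals[branch] += 1
        if number in voted:
            responded[branch] += 1
    return {branch: responded[branch] / totals[branch] for branch in totals}


def running_share_in_favour(answers):
    """Cumulative share of "yes" answers after each response, in arrival order."""
    shares = []
    yes = 0
    for count, answer in enumerate(answers, start=1):
        if answer == "yes":
            yes += 1
        shares.append(yes / count)
    return shares
```

A falling `running_share_in_favour` series as later responses arrive is the kind of pattern described above for the first week of the ballot.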

What didn’t work

By far the biggest problem we ran into emerged within the first week: the system we had chosen to run the ballot was being blocked by many email providers. UNISON has used SurveyMonkey for some time for surveys and consultative ballots, but it appears that many employers block both emails from SurveyMonkey and the SurveyMonkey web pages themselves.

As a result, the digital team had to set up a new version of the ballot with a different provider, SurveyGizmo, which we had been planning to move to anyway. This gives us much more control over our emails: we can link to ballots or surveys hosted at research.unison.org.uk rather than at a domain owned by SurveyGizmo. We can also make emails come from unison.org.uk addresses, though we weren't able to set that up in time for this ballot.

The sender of the first text message (for members who had a mobile number but no email address) was ‘UNISON’ rather than a mobile number. We should instead have sent it from a mobile number and said in the message itself ‘This is UNISON here’, so that anyone could reply directly, which they couldn't do with the first text. When we changed our approach for the second text message, we got a lot of replies from people who had moved on from their jobs and could therefore be excluded from the ballot. They could have replied to the first text if we had got it right the first time around.

Some lessons learned

Overall, the first trial of this new integrated approach to consultative balloting was a success, despite a few problems. However, there are also many areas where we could improve things in future.

- Members could only enter the ballot by clicking a link we sent them, so we could not publish an entry link on websites or social media. It could be possible to set up an entry route where a member accesses the ballot directly from a website after providing some data to identify themselves (membership number or national insurance number, for example) and some data to verify they are who they say they are (date of birth and surname).

- We weren't able to set up, in time, the system that would let us send email invitations from any address we wanted. In the event, the invitation came from a SurveyGizmo email address but was labelled as digital@unison.co.uk. As a result, people thought it was spam and deleted it. In future, we should be able to send emails from the address of the person named on the email, while still collating responses centrally.

- We could increase the automation of communications to make everything run seamlessly and to a highly specified timeline, so that all branches know what is happening when.

- Interestingly, places that had recently balloted members over workforce issues found that their response rate was lower. This could be down to people thinking “I’ve already been asked that…” or something similar. We should clear the decks of other communications around the time of consultative ballots, to avoid confusing members.

- We only started investigating the range of data we could make available to everyone involved in running the ballot (service group, regions, branch officers) late on. This meant that some opportunities to increase turnout based on the data might have been lost. Developing and publicising dashboards from the start is key.

- Another area where more information would help is the overall plan, which should be developed in advance. The process outlined in this blog was developed in a real hurry at the last minute, so a lot of the planning was done on the fly. Not knowing what was being sent when meant those involved in running the ballot felt they had lost some control over the process. Now we have done this once, we should be able to put together a detailed timeline and set of actions and responsibilities. In fact, this has already been partly developed; see below.

- We might have achieved over 50% turnout if we had had more email addresses on record. In future, we should allow more time to collect email addresses as part of the process, and perhaps look at how we can support branches to use technology such as phones or tablets to collect email addresses where we don't have them.
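The identify-then-verify entry route suggested above could work along these lines. This is a hypothetical sketch, not an existing UNISON system. It identifies a member by membership number and verifies them with surname and date of birth, as in the example given. `MemberRecord` and `verify_entry` are invented names.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class MemberRecord:
    # Hypothetical subset of a membership record.
    membership_number: str
    surname: str
    date_of_birth: date


def _normalise(text: str) -> str:
    # Ignore case and stray whitespace so "  o'brien " matches "O'Brien".
    return " ".join(text.split()).casefold()


def verify_entry(records: dict, membership_number: str,
                 surname: str, date_of_birth: date) -> Optional[str]:
    """Return the membership number if identity checks pass, else None."""
    record = records.get(membership_number.strip())  # step 1: identify
    if record is None:
        return None
    # Step 2: verify the member is who they say they are.
    if _normalise(record.surname) != _normalise(surname):
        return None
    if record.date_of_birth != date_of_birth:
        return None
    return record.membership_number
```

Date of birth and surname are not secret, so a real deployment would also need rate limiting and lockouts. The returned membership number would then be checked against the participation register before the member is let into the ballot.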